Have a read of Part 1 here if you are stumbling across this article first. The reason I built this cluster of Raspberry Pis is to learn, and I can say from the start that I have learned a few things. If you take a quick historical look at computing, it started with procedural coding paradigms. For example, programs written in BASIC and COBOL executed line after line, serially. Then there was a shift to the Object Oriented Programming (OOP) model found in languages like C++, Java and my favorite, C#. A big part of OOP is the ability to reuse code. In the procedural era you would often cut and paste code from one program to another, and if there was a bug or an enhancement, you had to update the code in every place you had used it. Not so in OOP: you code it once and then you can reuse it, inherit from it or override its behavior through polymorphism. My expertise lies in the OOP era, but I would guess that procedural code, like OOP code, benefits from running on computers with multiple processors, aka cores. Have a look at the following figure.

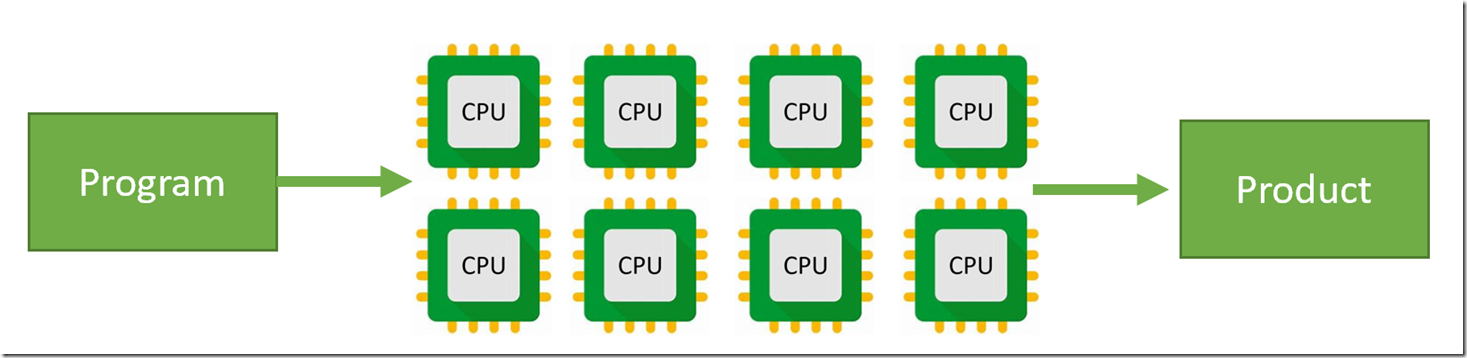

When you run a program written in C# on a server or workstation, it runs on the CPUs and consumes the memory available on that physical machine. When the program is executed once, it will, unless it is written otherwise, run on a single CPU at any given moment. If the program is executed a second time while the first execution is still running, in all probability that second invocation will run on a different CPU. That decision is made by the scheduler in the operating system’s kernel. However, it is possible for a programmer to write the program so that its work is split into threads or processes that the kernel can run on multiple CPUs at once, instead of the whole program being confined to a single one. This is commonly referred to as parallel processing or multi-threading. It is one of the more complicated areas of coding, but so is cluster computing, and both are book and PhD worthy. So in the OOP world of programs that I know, the execution of the logic is bound to a single physical machine and, in many cases, to a single CPU.
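To make the single-machine, multi-core idea concrete, here is a minimal sketch in Python (not C#, and not code from this project) that splits one CPU-heavy job into chunks and hands each chunk to its own worker process. The function names, the prime-counting workload and the limit of 50,000 are assumptions chosen purely for illustration.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def count_primes(start, stop):
    """Count the primes in [start, stop) by trial division (deliberately CPU heavy)."""
    count = 0
    for n in range(max(start, 2), stop):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def split_range(limit, parts):
    """Split [0, limit) into at most `parts` contiguous (start, stop) chunks."""
    step = max(1, -(-limit // parts))  # ceiling division, never zero
    return [(lo, min(lo + step, limit)) for lo in range(0, limit, step)]


def count_primes_parallel(limit, workers):
    """Give each chunk to its own worker process and add up the partial counts."""
    chunks = split_range(limit, workers)
    starts = [lo for lo, _ in chunks]
    stops = [hi for _, hi in chunks]
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_primes, starts, stops))


if __name__ == "__main__":
    cores = os.cpu_count() or 1
    print(cores, "cores,", count_primes_parallel(50_000, cores), "primes below 50000")
```

The operating system still decides which core each worker process lands on. All the program does is offer it several independent pieces of work instead of one.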

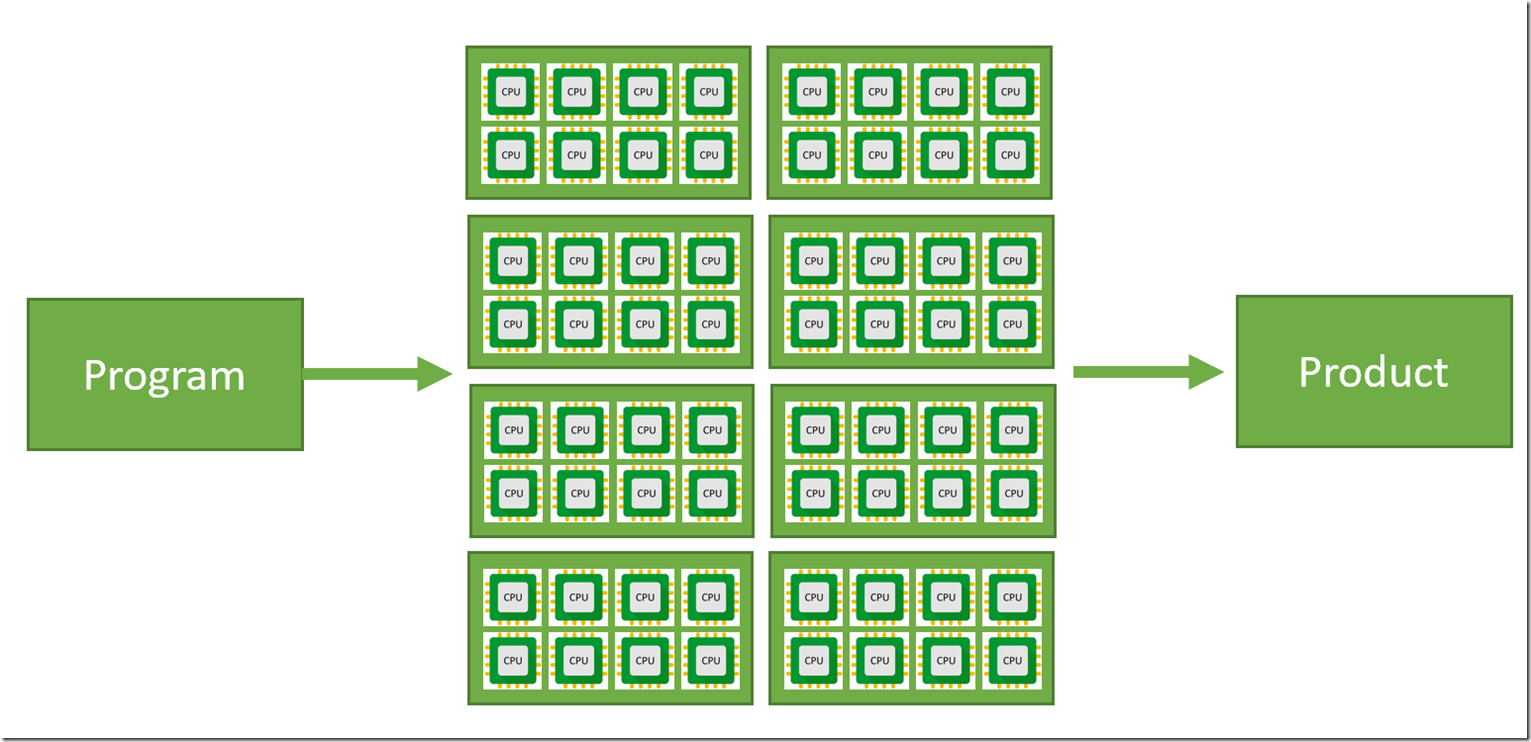

One reason I am working on this project is that I want to learn how cluster computing differs from what I know about the procedural and OOP programming paradigms. I am still learning, but I will take a stab at it with this image.

You can already draw some conclusions just by seeing the increase in the number of CPUs. In my exercise I am using 8 nodes. The questions are:

- How do I write a program so that it can be run across multiple nodes?

- Can it be written to benefit from all the CPUs on the node?

- How do you execute such a program and from where?

- How do you merge all the results together at the end to get a result?

- How can you check to make sure all the parts ran and completed successfully?

Those are just a few questions I want to answer, and I am certain they will generate many more. This is why I am building this cluster of Raspberry Pis. I am not 100% sure, but I think this is the model used with Big Data and what is called High Performance Computing (HPC). We’ll see.
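As a first rough sketch of the split, run, merge and check questions above, the pattern can be tried on a single machine before any real cluster tooling is involved. Everything here is an assumption made for illustration: the node names are hypothetical, the "work" is just summing squares, and `run_part` runs locally as a stand-in for whatever mechanism ends up executing code on a real node.

```python
from dataclasses import dataclass


@dataclass
class PartResult:
    node: str   # hypothetical node name
    ok: bool    # did this part finish without an error?
    value: int  # partial result (0 when the part failed)


def split_work(items, node_names):
    """Deal items out to the nodes round-robin, one list of work per node."""
    return {name: items[i::len(node_names)] for i, name in enumerate(node_names)}


def run_part(node, items):
    """Stand-in for running on a remote node: here it just sums squares locally."""
    try:
        return PartResult(node, True, sum(x * x for x in items))
    except Exception:
        return PartResult(node, False, 0)


def merge(results):
    """Refuse to merge unless every part reported success, then add the parts up."""
    failed = [r.node for r in results if not r.ok]
    if failed:
        raise RuntimeError(f"parts failed on: {failed}")
    return sum(r.value for r in results)


nodes = [f"node{i}" for i in range(1, 9)]  # 8 hypothetical node names
work = split_work(list(range(100)), nodes)
results = [run_part(name, items) for name, items in work.items()]
total = merge(results)
print(total)
```

The point of the `ok` flag is the last question in the list: the merge step only trusts the total if every part says it completed.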

Thoughts about this so far

One of the nodes in the cluster sometimes doesn’t boot. I have no idea why, and it is very time consuming to get it connected up and debugged. In a cluster of only 8 nodes this is cumbersome. In a real supercomputer scenario where you have 1000s of nodes, I wonder how they manage that…

I find that I need to boot each node, one after the other. If I simply hit the power switch and turn them all on at the same time, there seem to be some issues with them getting an IP address and getting connected to the network so they can be used to execute my program. I cannot imagine that this is the method used in a real scenario where there are 1000s of nodes. But it is the only means I have found to make sure they all get allocated an IP.

The images I use to boot the machines are all identical. But sometimes writing them fails, aka the copy is corrupted, and I only realize that when I try to boot one of the nodes using it. I find that I need to write the image over and over again until I finally get one that boots. This is VERY time consuming. I am not using the highest specification of hardware, so perhaps better hardware would improve the situation.
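One way to catch a corrupted write before trying to boot from it is to read the data back and compare checksums. The following is a minimal sketch, assuming the image is a plain file and the written copy can be read back as a file or device. The function names are made up for this example, and reading a raw device usually needs elevated permissions. Because the card is normally larger than the image, only the first image-sized run of bytes is compared.

```python
import hashlib
import os


def sha256_of(path, length=None, chunk_size=1024 * 1024):
    """Hash the first `length` bytes of a file or device (the whole file if None)."""
    digest = hashlib.sha256()
    remaining = length
    with open(path, "rb") as f:
        while remaining is None or remaining > 0:
            want = chunk_size if remaining is None else min(chunk_size, remaining)
            block = f.read(want)
            if not block:
                break  # reached the end of the file or device
            digest.update(block)
            if remaining is not None:
                remaining -= len(block)
    return digest.hexdigest()


def written_copy_matches(image_path, target_path):
    """Compare the image with the same number of bytes read back from the target."""
    size = os.path.getsize(image_path)
    return sha256_of(image_path) == sha256_of(target_path, length=size)
```

A call would look like `written_copy_matches("cluster.img", "/dev/sdX")`, where both paths are placeholders. A `False` result means the card should be rewritten before it goes anywhere near a node.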

I might also have a problem with my power supply. I am not 100% sure because I am just getting started, but it seems like the 7th and 8th nodes have the most trouble coming online. I have booted up the cluster many times, booting the nodes in different orders, and it is mostly the last ones that fail. That also makes me think that maybe the image creation I mentioned earlier is not the problem, and that it is a power supply issue instead.

It is common knowledge that if you simply unplug a computer, there is a chance you will have a problem the next time you boot it up. Since I shut down my cluster after I finish, I need to find a way to make sure all the nodes have shut down before I power off. I can probably script that, but I had to learn that, and it’s an example of something I learned the hard way. When a node didn’t come up and I jumped through all the hoops to get to the console, I saw this error: “Cannot open access to console. The root account is locked see sulogin(8) man page for more details.” It required that I press Enter, so on a headless system the boot hung there permanently.

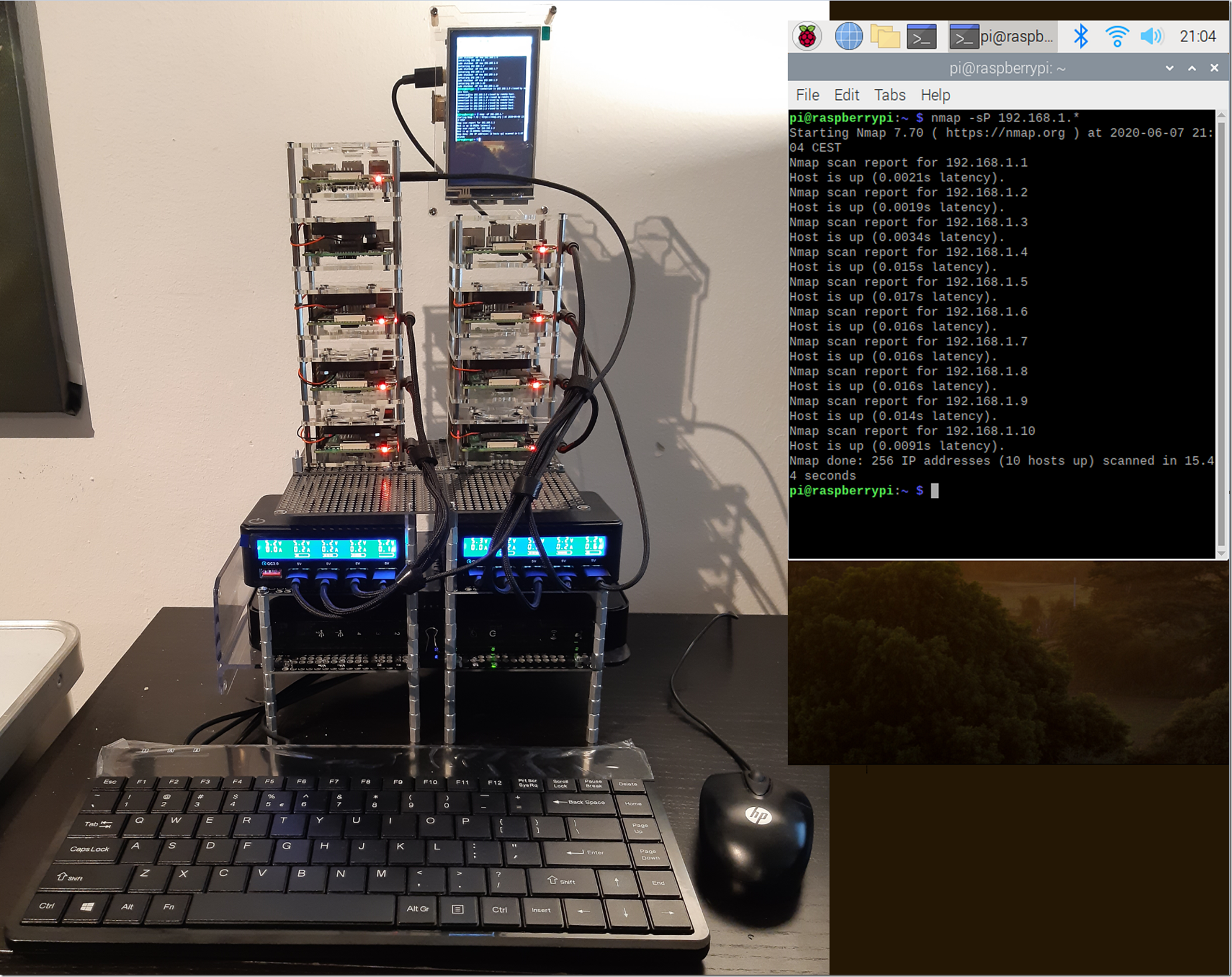

The last one for now is accessing a node when something isn’t right. I have my client console where I manage the nodes, so long as they are available, online and connected. But sometimes I do not see them in the list and I don’t see their IP address, so I cannot SSH to them. I have to manually connect a monitor, mouse and keyboard, and often reboot…
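Both the "which nodes can I reach?" problem and the earlier "have they all shut down yet?" problem can be approximated by probing each node’s SSH port. This is a minimal sketch under a few assumptions: the nodes run an SSH server on port 22, the addresses are ones you already know, and a closed port is taken as a reasonable hint (not proof) that the node has gone down. The function names are made up for this example.

```python
import socket
import time


def ssh_port_open(host, port=22, timeout=1.0):
    """Return True if a TCP connection to the node's SSH port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def wait_until_down(hosts, is_up=ssh_port_open, attempts=30, delay=2.0):
    """Poll until no host answers; return the hosts still up when attempts run out."""
    still_up = list(hosts)
    for _ in range(attempts):
        still_up = [h for h in still_up if is_up(h)]
        if not still_up:
            break
        time.sleep(delay)
    return still_up
```

For the reachability check, something like `[h for h in hosts if not ssh_port_open(h)]` lists the nodes that need a monitor and keyboard. For shutdown, an empty list from `wait_until_down(hosts)` suggests it is reasonably safe to cut the power, where `hosts` is a list of node addresses you supply yourself.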

I hope that I can get this to work consistently so that I can rely on the hardware to work as expected. Only then can I get into the coding aspects and rock! Here is a picture of my cluster so far. I have a few more enhancements to make before I consider it complete, and I have lots of coding ahead of me.