A critical part of implementing a Generative AI solution that uses Retrieval Augmented Generation (RAG), like Microsoft Copilot, is the discovery of the most relevant grounding documents. These documents are then passed to the LLM, which uses them to generate its natural language response. For example, if you want to find out how to resolve an HTTP 503 status code, a valid tokenized user prompt would be something like “Solve HTTP 503 status”. To improve the relevance of my documentation, I tested how the search score changes when a summary of the document is included within the document itself. To create the summaries I used the Microsoft Azure AI Language service, so the first step was to create a Language service resource.

Figure 1, Azure AI services | Language service

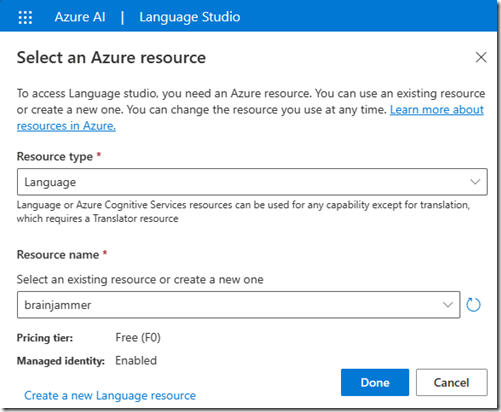

This resource manages the consumption and features available to the provisioned service, and it is required to configure and work within Azure Language Studio. You can access Azure Language Studio here. When I first enter Azure Language Studio, it asks for my Language service as the Resource name, as shown in Figure 2.

Figure 2, Azure Language Studio

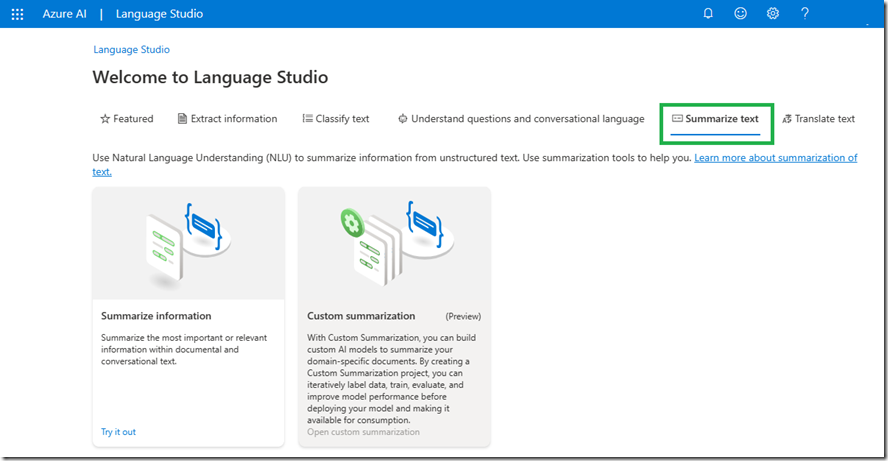

Then I navigate to the Summarize text tab and select the Summarize information feature by clicking its tile, as shown in Figure 3.

Figure 3, Azure Language Studio, Summarize text, Summarize information

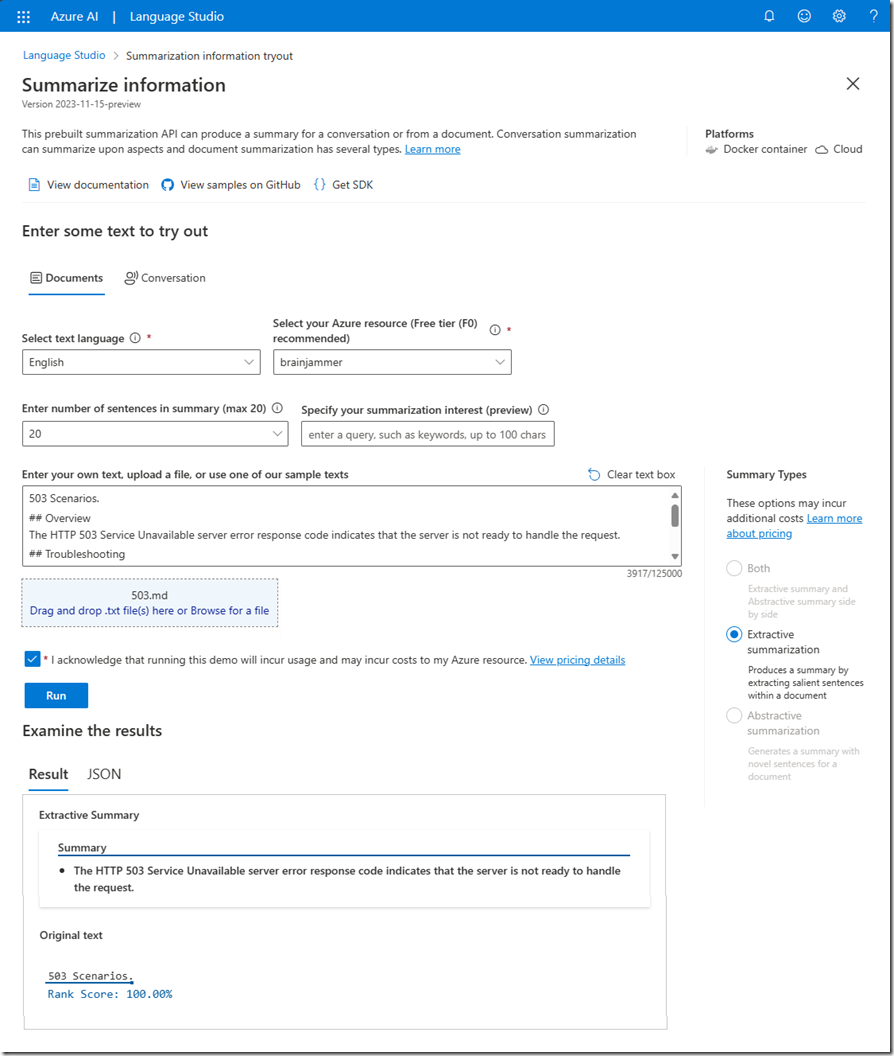

Summarizing the document is straightforward: you either enter the text content into the text box or upload a file, which places its contents into the text box. Press the Run button to perform the summarization.

Figure 4, Azure Language Studio, Summarize text, Summarize information, run the summarization

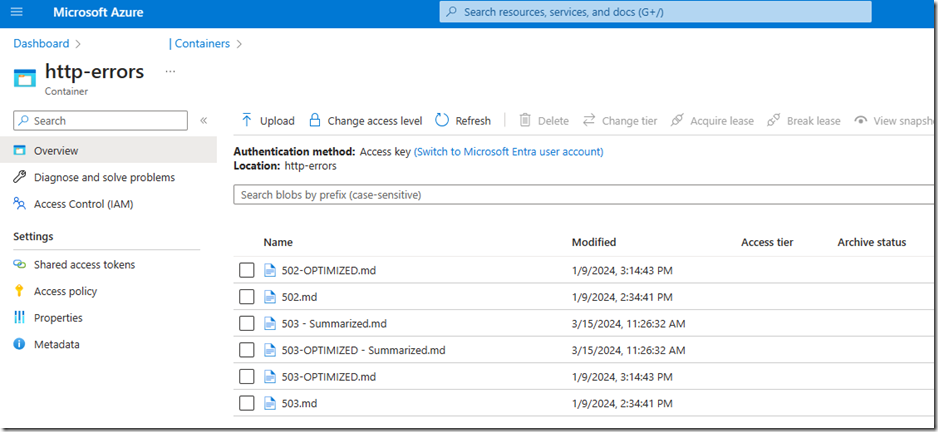
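The same summarization can also be requested outside of Language Studio. The following is a minimal sketch, not the method used in this post, that only builds the HTTP request for an extractive summarization job against the Language service REST API. The function name `build_summarization_request`, the task name, the `2023-04-01` API version, and the sentence count are assumptions chosen for illustration; the endpoint and key come from your own Language resource.

```python
import json
import urllib.request


def build_summarization_request(endpoint, key, text, sentence_count=3):
    """Build (but do not send) an extractive summarization job request.

    Assumptions: api-version 2023-04-01 and a single English document.
    """
    url = f"{endpoint.rstrip('/')}/language/analyze-text/jobs?api-version=2023-04-01"
    body = {
        "displayName": "Summarize wiki page",
        "analysisInput": {
            "documents": [{"id": "1", "language": "en", "text": text}]
        },
        "tasks": [
            {
                "kind": "ExtractiveSummarizationTask",
                "taskName": "summarize-document",  # hypothetical task name
                "parameters": {"sentenceCount": sentence_count},
            }
        ],
    }
    headers = {
        "Content-Type": "application/json",
        "Ocp-Apim-Subscription-Key": key,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )
```

Summarization runs as an asynchronous job, so sending this request with `urllib.request.urlopen` should return an `operation-location` header that you poll for the finished summary rather than the summary itself.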

The file that was summarized was a simple Azure DevOps wiki file that contained information about HTTP 503 errors. The file, 503.md, was in raw form and contained all the markdown tags, tables, and white space you would expect to find in a wiki or markdown file. I also manually optimized that file by removing all the tags, white space, and other unnecessary content, and saved the result as a new file named 503-OPTIMIZED.md. I then copied the summary produced by the action taken in Figure 4 into a copy of each file, named the copies 503 – Summarized.md and 503-OPTIMIZED-Summarized.md, and uploaded them into my Azure Blob Storage container, as seen in Figure 5.

Figure 5, Azure Blob Storage container storing content for grounding document retrieval

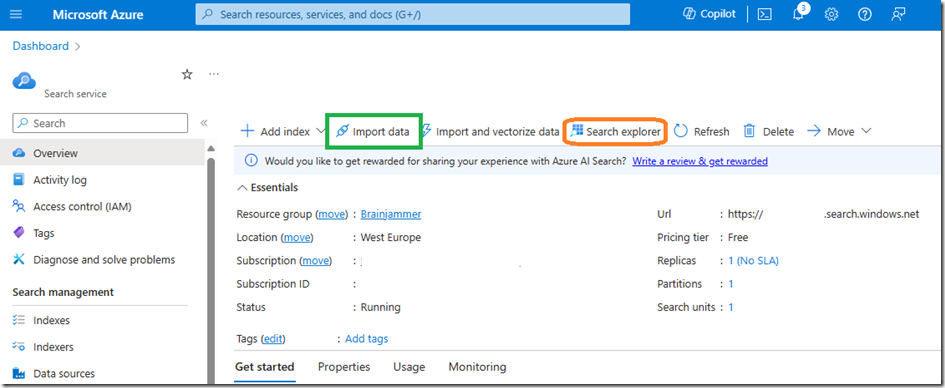
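The optimization of 503.md was done by hand, but the same kind of cleanup can be approximated in code. The sketch below is a rough heuristic under stated assumptions: `strip_markdown` and `add_summary` are hypothetical helper names, the regular expressions cover only common markdown constructs, and placing the summary at the top of the file is an assumption, since where the summary was pasted is not something this post specifies.

```python
import re


def strip_markdown(text):
    """Remove common markdown markup and collapse white space (heuristic)."""
    text = re.sub(r"!\[[^\]]*\]\([^)]*\)", " ", text)     # drop images
    text = re.sub(r"\[([^\]]*)\]\([^)]*\)", r"\1", text)  # keep link text only
    text = re.sub(r"<[^>]+>", " ", text)                  # drop inline HTML tags
    lines = []
    for line in text.splitlines():
        if re.fullmatch(r"[\s|:-]+", line):
            continue                                      # table separator rows
        line = re.sub(r"^\s{0,3}#{1,6}\s+", "", line)     # heading markers
        line = re.sub(r"^\s*([-*+]|\d+\.)\s+", "", line)  # list bullets
        line = line.replace("|", " ")                     # table cell borders
        line = re.sub(r"[*`]+", "", line)                 # emphasis, inline code
        lines.append(line)
    return re.sub(r"\s+", " ", " ".join(lines)).strip()


def add_summary(body, summary):
    """Prepend the summary to the document body (placement is an assumption)."""
    return f"Summary: {summary.strip()}\n\n{body.strip()}\n"
```

Running `strip_markdown` over the raw wiki text and then `add_summary` over the result would give a file comparable in spirit to the optimized and summarized variant, although a manual pass can remove unnecessary content that no regular expression will recognize.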

These documents are the ones I ingested into Azure AI Search, which is the endpoint used to discover the most relevant grounding documents for a given prompt. To ingest and index the documentation, I provisioned Azure AI Search, opened it, and used the Import data wizard, which walked me through the process. Figure 6 illustrates this.

Figure 6, Using Azure AI Search to discover most relevant grounding document for a Retrieval Augmented Generation (RAG) LLM solution.

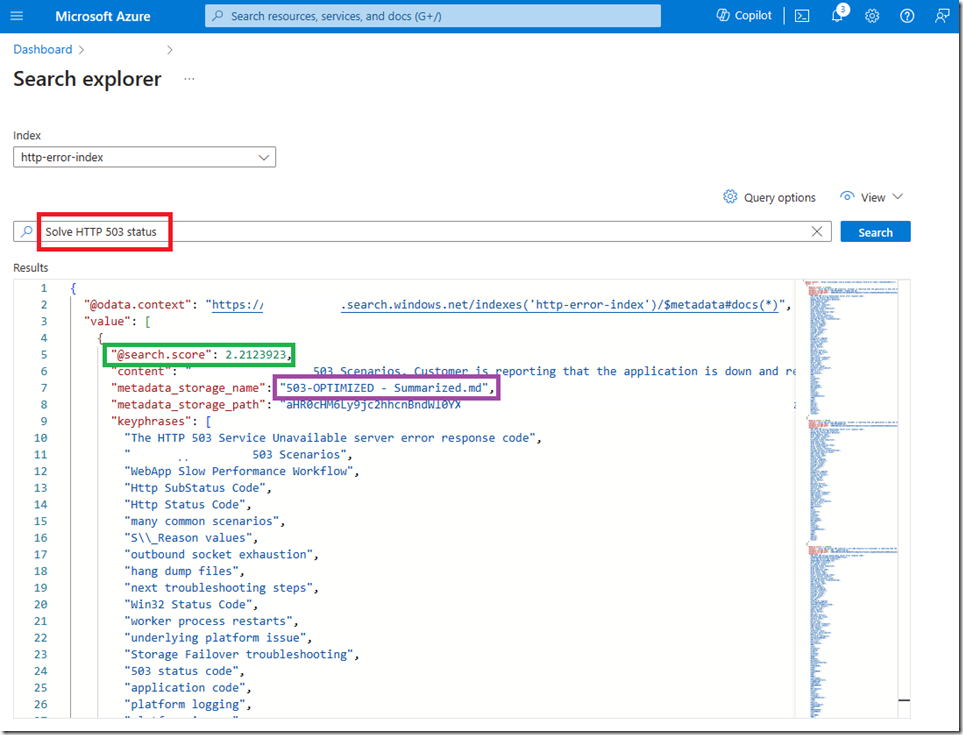

Once the data is indexed you can use the Search explorer to search for the documentation and check the search score, as seen in Figure 7.

Figure 7, checking the search score for grounding document retrieval
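The score that Search explorer displays is also returned by the Azure AI Search REST API as `@search.score` on each result. As a hedged sketch, the code below builds a search request and pulls the file name and score out of a response. The helper names, the `2023-11-01` API version, and the `metadata_storage_name` field are assumptions: that field is what a blob indexer typically produces, but your index may name it differently.

```python
import json
import urllib.request


def build_search_request(service, index, api_key, query, top=10):
    """Build (but do not send) a keyword search request for an index."""
    url = (
        f"https://{service}.search.windows.net/indexes/{index}"
        "/docs/search?api-version=2023-11-01"
    )
    body = {"search": query, "select": "metadata_storage_name", "top": top}
    headers = {"Content-Type": "application/json", "api-key": api_key}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def extract_scores(response_json, name_field="metadata_storage_name"):
    """Return (file name, search score) pairs from a parsed search response."""
    return [
        (doc.get(name_field), doc["@search.score"])
        for doc in response_json.get("value", [])
    ]
```

To use it, send the request with `urllib.request.urlopen`, parse the body with `json.load`, and pass the result to `extract_scores` to compare documents for a prompt such as “Solve HTTP 503 status”.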

Two things I should mention. The first is that the search result is very dependent on, amongst other things, the other content discoverable in the index and the tokenized prompt used for searching. The second is that the search feature used here is Keyword. There are other features like Vector, Hybrid, and Hybrid + Semantic ranker. Depending on which index and search endpoint feature you implement, the results may be different. Nonetheless, the outcome was an interesting one: the content that included a summary of itself scored higher than the content without one, as seen in Table 1.

| File Name | Search Score |
| --- | --- |
| 503-OPTIMIZED – Summarized.md | 2.2123923 |
| 503-OPTIMIZED.md | 2.206798 |
| 503 – Summarized.md | 2.1358426 |
| 503.md | 2.044827 |

Table 1, summarized content search score impact
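To put the differences in Table 1 into perspective, the relative gain from adding a summary can be computed directly from the scores. This is simple arithmetic on the values in the table; the `relative_gain` helper is a hypothetical name introduced for illustration.

```python
# Search scores copied from Table 1.
scores = {
    "503-OPTIMIZED – Summarized.md": 2.2123923,
    "503-OPTIMIZED.md": 2.206798,
    "503 – Summarized.md": 2.1358426,
    "503.md": 2.044827,
}


def relative_gain(with_summary, without_summary):
    """Percentage increase of the summarized score over the original score."""
    return (with_summary - without_summary) / without_summary * 100


raw_gain = relative_gain(scores["503 – Summarized.md"], scores["503.md"])
optimized_gain = relative_gain(
    scores["503-OPTIMIZED – Summarized.md"], scores["503-OPTIMIZED.md"]
)

print(f"Raw file gain from summary: {raw_gain:.2f}%")
print(f"Optimized file gain from summary: {optimized_gain:.2f}%")
```

The summary lifted the raw file's score by roughly 4.45% but the already optimized file's score by only about 0.25%, so in this single test the summary mattered most for the unoptimized content.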

I am looking forward to more testing with other Microsoft AI services to see how they can be used to further improve the discovery of the most relevant grounding document based on the user's prompt. Happy AI’ing.