Because it was there and because I could... well, maybe not. It actually wasn’t very difficult, because these instructions worked perfectly. Following them went without a hitch, almost. There were a few small hiccups, but we made it through without much trouble.

It was rather time consuming to re-image the SD cards for all 8 nodes. I built the original image with the official Raspberry Pi Imager. Since the Raspberry Pi Imager only writes images, I used Win32 Disk Imager to create an image file from that card, and then used the Raspberry Pi Imager to write that image to the other cards. The image was 31.2GB, and an interesting fact I forgot about is that a 32GB SD card doesn’t always have 32GB; the usable space is somewhere between 28GB and 32GB. It turned out that 2 of my 8 32GB SD cards had less than the required space, which delayed the project a few days while I reordered. Part of the reason is units: card manufacturers count 1GB as 1,000MB (1,000,000,000 bytes), while Windows reports sizes in binary units, where 1GB means 1,024MB (1,073,741,824 bytes), so a 32GB card shows up as only about 29.8GB. On top of that, the exact capacity varies a little from card to card, even for the same advertised size.

The overall project was on the expensive side. I won’t calculate it for you, but you can do the math.
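The unit mismatch is easy to check with a little arithmetic. Here is a minimal Python sketch, assuming the card packaging uses decimal gigabytes and the operating system reports binary ones. The helper `image_fits` and the byte counts in the example are hypothetical illustrations, not measurements from my cards.

```python
GB = 1000 ** 3   # decimal gigabyte (10^9 bytes), as printed on card packaging
GIB = 1024 ** 3  # binary gibibyte (2^30 bytes), which Windows also labels "GB"


def decimal_to_binary(size_gb: float) -> float:
    """Convert a manufacturer's decimal GB figure to binary GiB."""
    return size_gb * GB / GIB


def image_fits(image_bytes: int, card_bytes: int) -> bool:
    """A full-disk image can only be written to a card at least as large, byte for byte."""
    return card_bytes >= image_bytes


if __name__ == "__main__":
    print(f"32 GB on the label = {decimal_to_binary(32):.2f} GiB")  # 29.80 GiB
    # Hypothetical numbers: a 31.2 GB (decimal) image and a card that is slightly short.
    print(image_fits(31_200_000_000, 31_000_000_000))  # False
```

The point of `image_fits` is that the comparison has to be done in bytes: two cards sold as "32GB" can differ by a few hundred megabytes, and an image made from the larger card will not fit on the smaller one.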

| Item | Link | Quantity |
|---|---|---|
| Raspberry Pi 4 | here | 9 |
| USB Power Cables | here | 9 |
| Touch Screen case | here | 1 |
| Raspberry Pi cluster case | here | 2 |
| HDMI to HDMI micro | here | 1 |
| 32 GB SD cards | here | 9 |
| Card reader/writer | here | 1 |
| USB Power Hub | here | 2 |
| Power strip | here | 1 |
| 8 Port Switch | here | 1 |
| Network cables | here | 10 |
| Keyboard | here | 1 |
| Mouse | here | 1 |

I already had a NETGEAR router, so I didn’t need to get a new one. Since I was part of a small team building this, it took a bit longer due to scheduling conflicts, but it can all be assembled in 3-4 hours. Imaging the server nodes took about 2 hours per disk, and since we had only one workstation, it was a serial task that took some time. After each server image was created successfully, we booted up the client and the server and executed these commands:

```shell
nmap -sP 192.168.1.*
sudo python3 compute.py
```

The first command, `nmap -sP`, ran a ping scan (no port scan) of the network, which was not connected to the internet, to discover the IP addresses of the connected devices. Newer versions of nmap call this option `-sn`. The second command ran a Python script that sent 16 jobs to the servers on the network; at this point there was only one server. Once we knew that node was working, we connected all the other nodes and ran the same commands again. The jobs clearly finished faster when there were more nodes in the cluster to execute them.
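To make the "more nodes, faster" observation concrete without a cluster, here is a minimal single-machine sketch. It is not the OctaPi `compute.py` script: a thread pool stands in for the nodes, and each job simply sleeps for a fixed, hypothetical amount of time. With 16 equal jobs and n workers, the jobs run in about ceil(16/n) rounds, so the wall-clock time should drop roughly in proportion to the number of workers.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def job(seconds: float) -> float:
    """Stand-in for one unit of work on a node: it just sleeps."""
    time.sleep(seconds)
    return seconds


def run_jobs(num_jobs: int, num_workers: int, job_seconds: float = 0.05) -> float:
    """Run num_jobs identical jobs on num_workers workers; return elapsed wall-clock seconds."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # Queued jobs are picked up by whichever worker is idle,
        # much like a scheduler handing jobs to free nodes.
        list(pool.map(job, [job_seconds] * num_jobs))
    return time.perf_counter() - start


def ideal_time(num_jobs: int, num_workers: int, job_seconds: float = 0.05) -> float:
    """Lower bound: equal jobs run in ceil(num_jobs / num_workers) rounds."""
    return math.ceil(num_jobs / num_workers) * job_seconds


if __name__ == "__main__":
    for workers in (1, 2, 4, 8):
        print(f"{workers} worker(s): {run_jobs(16, workers):.2f} s "
              f"(ideal {ideal_time(16, workers):.2f} s)")
```

Threads are good enough here only because `time.sleep` releases Python's global interpreter lock; CPU-bound Python code would need separate processes or separate machines, which is exactly what the cluster provides.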

It isn’t a stretch to see that the more CPUs and memory you have to run a program, the faster it will perform, at least for work that can be split into independent pieces. I am confident I am not stating anything groundbreaking, but you might have noticed that CPU clock speeds are no longer climbing the way they used to, and Moore’s Law (the observation that the number of transistors on a chip doubles roughly every two years) appears to be slowing down. One reason, and I am sure there are more, is the heat generated by the transistors running in the computers. Running servers costs money, mainly electricity, and if you have ever walked into a datacenter, you will quickly realize how cold it is, because all that heat has to be removed. It is no longer cost effective to keep making CPUs faster, because the heat increases with the speed. That is kind of the reason you are seeing quantum computing and other directions being explored, rather than simply more and faster transistors.
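A standard first-order model of CMOS dynamic power (not from the original post) shows why heat rises with speed. Here $\alpha$ is the fraction of the circuit switching each cycle, $C$ the switched capacitance, $V$ the supply voltage and $f$ the clock frequency:

$$
P_{\text{dyn}} \approx \alpha \, C \, V^{2} f
$$

Power, and therefore heat, grows linearly with $f$ at a fixed voltage, and because higher clock speeds usually also require a higher $V$, which enters squared, the heat grows faster than the clock speed itself.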

Now for the why….

There are a massive number of concepts and complexities that the Cloud is going to abstract away from people, even technical people. Even C#, however complex you might find it, has abstracted much of the complexity of programming away. That doesn’t mean the complexity doesn’t exist; it just means you aren’t exposed to it, and therefore you don’t know it even exists. You don’t know what you don’t know. What I see is that the Cloud is moving in the same direction from an infrastructure and hardware perspective as programming did. Don’t get me wrong, this is a good thing. No, actually, it is a great thing! It means more people will be able to consume it and create great things, simply because the complexity of doing so has been abstracted away. Again, abstracting away the complexity doesn’t mean the complexity no longer exists! It does, and somebody, actually a lot of people, need to understand the internals, or one day the light switch will get turned off and no one will know how to turn it back on. Kind of a scary thought actually….

Anyway, programming an application in C# to run on a server, server pool or web server farm isn’t the same programming paradigm as running a program in a cluster. One of my WHYs is to learn this paradigm. I know a bit about parallel processing on a single machine with multiple processors, but I am fairly certain that a cluster doesn’t work the same way. In addition, here is a list of other projects I plan to work on with my team.

- Study the scripts provided with the OctaPi project

- Write a few Python programs designed for cluster calculations:

    - See how fast I can calculate Pi (3.14) compared with the same program on a single workstation

    - See how fast I can find prime numbers compared with the same program on a single workstation

    - Search for Fibonacci patterns in a few stock price fluctuations

- Hash a string using MD5 and then attempt to crack it using a rainbow table

- Rebuild the cluster using Ubuntu, Kubernetes and MicroK8s

- Simulate a quantum computer and run some simulations on it using Q# and F#, maybe using LIQUi|⟩

- Finally, install Kali Linux or another toolset such as Cyborg Hawk, BlackArch, Parrot Security or BackBox and test out some hacks. **NOTE:** Hacking systems you are not authorized to test is illegal, even if the damage is unintentional. If you use these tools, make sure the machines exist within an isolated network.
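The Pi and prime-number items in the list above can be framed the same way: split the work into independent slices, send each slice to a node as a job, and add up the partial results. Here is a minimal single-machine sketch of that decomposition. The function names are hypothetical, Pi is estimated with the midpoint rule for the integral of 4/(1+x²) over [0, 1] (one common choice, not necessarily what I will end up using), and the slices are evaluated one after another rather than on real nodes.

```python
import math


def split_range(n: int, chunks: int) -> list[tuple[int, int]]:
    """Split range(n) into `chunks` contiguous slices whose sizes differ by at most one."""
    size, extra = divmod(n, chunks)
    bounds, start = [], 0
    for c in range(chunks):
        end = start + size + (1 if c < extra else 0)
        bounds.append((start, end))
        start = end
    return bounds


def pi_job(start: int, end: int, n: int) -> float:
    """Midpoint-rule share of the integral of 4/(1+x^2) over [0, 1], which equals pi."""
    h = 1.0 / n
    return h * sum(4.0 / (1.0 + ((i + 0.5) * h) ** 2) for i in range(start, end))


def is_prime(k: int) -> bool:
    """Trial division by 2 and odd numbers up to sqrt(k)."""
    if k < 2:
        return False
    if k % 2 == 0:
        return k == 2
    for d in range(3, math.isqrt(k) + 1, 2):
        if k % d == 0:
            return False
    return True


def prime_job(start: int, end: int) -> int:
    """Count the primes in [start, end)."""
    return sum(1 for k in range(start, end) if is_prime(k))


def estimate_pi(n: int = 100_000, nodes: int = 8) -> float:
    # Each (start, end) slice is what one node would receive as a job.
    return sum(pi_job(s, e, n) for s, e in split_range(n, nodes))


def count_primes_below(limit: int, nodes: int = 8) -> int:
    return sum(prime_job(s, e) for s, e in split_range(limit, nodes))


if __name__ == "__main__":
    print(f"{estimate_pi():.6f}")      # 3.141593
    print(count_primes_below(10_000))  # 1229
```

One design caveat: equal-sized slices are not equal amounts of work for the prime search, because trial division gets slower for larger numbers, so the node holding the last slice finishes last. Handing out many small slices, so idle nodes can pick up more, is one way to even that out.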
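For the MD5 item, here is a toy rainbow table in Python, sketched under loudly stated assumptions: the keyspace is just the 4-digit PINs "0000" to "9999", and the reduction function `reduce_hash`, the chain length and the chain starts are all hypothetical choices made for illustration. A rainbow table stores only the first and last plaintext of each hash/reduce chain and regenerates the middle when a lookup hits. Building the chains is independent per chain, which is why it could be spread across the nodes.

```python
import hashlib
from typing import Optional

KEYSPACE = 10_000  # hypothetical keyspace: every 4-digit PIN "0000".."9999"
CHAIN_LEN = 50     # hash/reduce steps per chain


def md5_hex(text: str) -> str:
    return hashlib.md5(text.encode("utf-8")).hexdigest()


def reduce_hash(digest: str, step: int) -> str:
    """Map a digest back into the keyspace; adding `step` gives each column its own reduction."""
    return f"{(int(digest, 16) + step) % KEYSPACE:04d}"


def build_table(starts: list[str]) -> dict[str, str]:
    """Walk each chain and keep only endpoint -> start."""
    table: dict[str, str] = {}
    for start in starts:
        plain = start
        for step in range(CHAIN_LEN):
            plain = reduce_hash(md5_hex(plain), step)
        table.setdefault(plain, start)  # if two chains end the same way, keep the first
    return table


def crack(target: str, table: dict[str, str]) -> Optional[str]:
    """Return a plaintext whose MD5 equals `target`, or None if no stored chain covers it."""
    for col in range(CHAIN_LEN - 1, -1, -1):
        # Assume the target sits at column `col` and walk to the end of that chain.
        plain = reduce_hash(target, col)
        for step in range(col + 1, CHAIN_LEN):
            plain = reduce_hash(md5_hex(plain), step)
        start = table.get(plain)
        if start is None:
            continue
        # Endpoint matched: regenerate the chain from its start to find the plaintext.
        candidate = start
        for step in range(CHAIN_LEN):
            digest = md5_hex(candidate)
            if digest == target:
                return candidate
            candidate = reduce_hash(digest, step)
        # Otherwise it was a false alarm (merged chains); try the next column.
    return None


if __name__ == "__main__":
    starts = [f"{n:04d}" for n in range(0, KEYSPACE, 20)]  # 500 hypothetical chain starts
    table = build_table(starts)
    print(crack(md5_hex("4821"), table))  # the PIN, or None if no chain covers it
```

A table like this only works against unsalted hashes; adding a random salt to each password before hashing makes precomputed tables useless, which is one reason MD5 without a salt should not be used for passwords.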

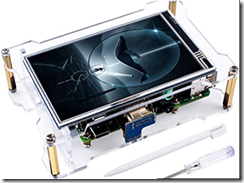

As I progress with my objectives, I will share what I have experienced. It might take months, years or a decade, or it might be something I never complete, but at least I got started. Here is a picture of how the cluster looks after being assembled, without the disk images. Once I get that done, I’ll likely post another. Have a good one!