I worked on this issue and wanted to write it down because I love it when I can execute the WinDbg commands in my “Must use, must know WinDbg commands, my most used” article and find the reason for the problem.

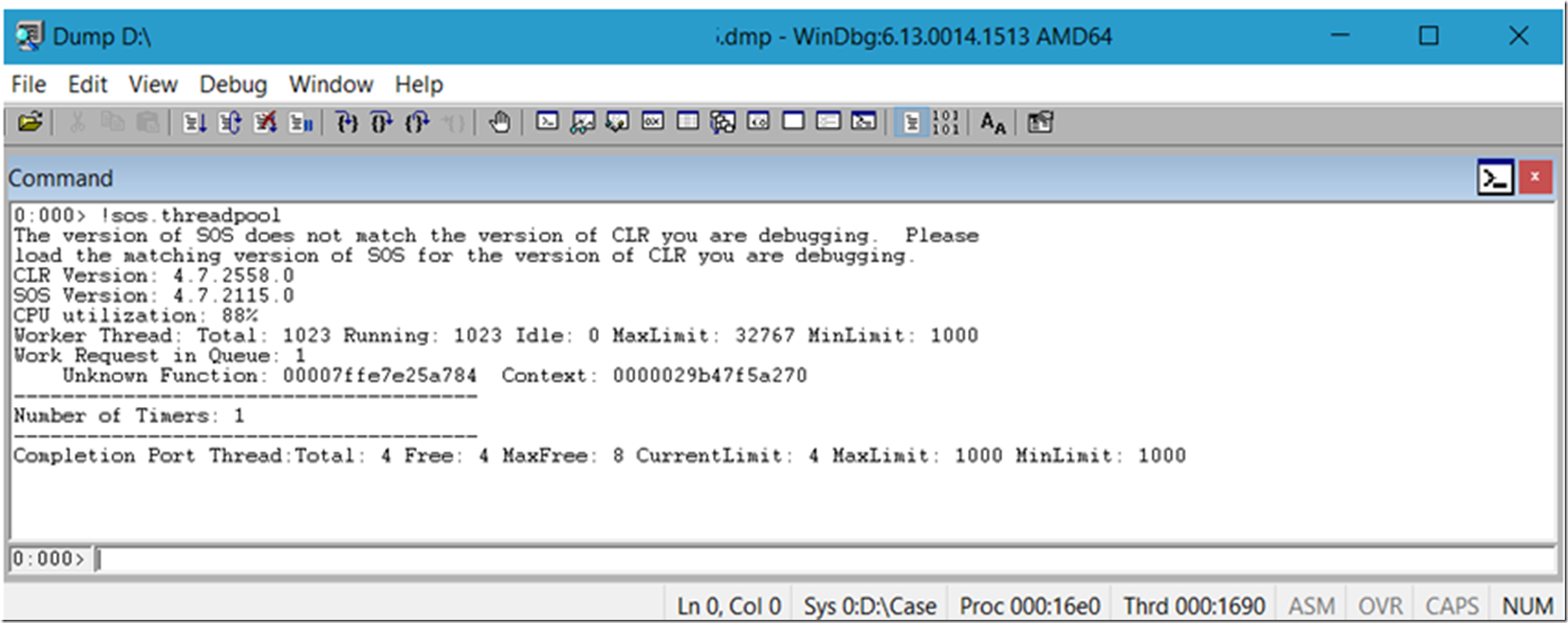

I executed !sos.threadpool and did see that the CPUs were pretty high, as seen in Figure 1.

Figure 1, Redis Cache, Azure App Service slowness

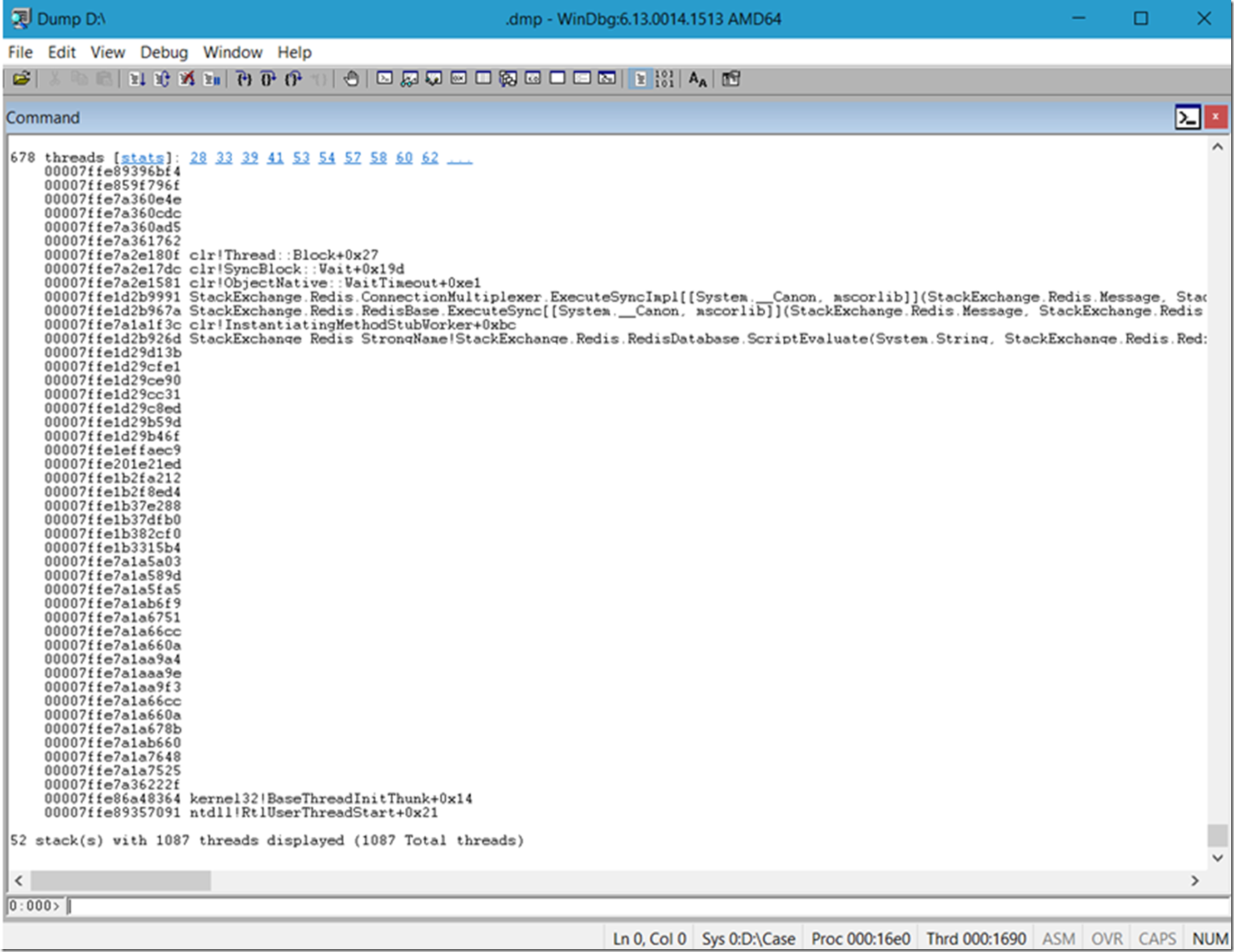

Remember that the percentage rendered for CPU utilization is that of the machine and not the process. Next I executed the almighty !mex.us and the issue became pretty apparent as you can see in Figure 2.

clr!Thread::Block+0x27

clr!SyncBlock::Wait+0x19d

clr!ObjectNative::WaitTimeout+0xe1

StackExchange.Redis.ConnectionMultiplexer.ExecuteSyncImpl[[System.__Canon, mscorlib]]()

StackExchange.Redis.RedisBase.ExecuteSync[[System.__Canon, mscorlib]]()

Figure 2, Redis Cache, Azure App Service slowness

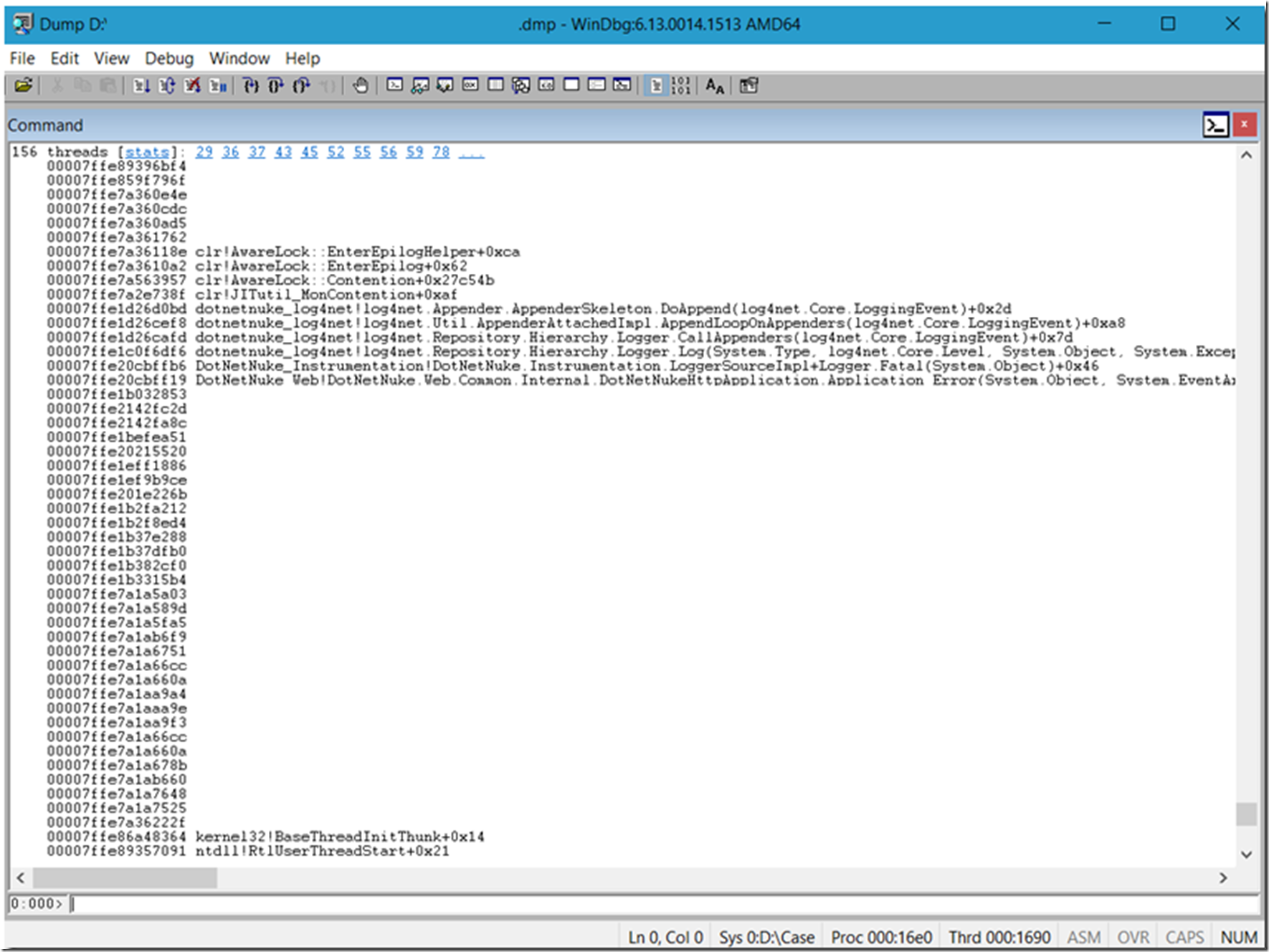

It did look like Redis Cache was doing something as it is seen on 678 threads and the fact that there is a method called Block() should signal some contention. Looking further at the output of !mex.us I found that there was an exception being thrown and logged via log4net. See Figure 3. I wrote an article about “How to configure log4net on Azure App Service” here.

clr!AwareLock::EnterEpilogHelper+0xca

clr!AwareLock::EnterEpilog+0x62

clr!AwareLock::Contention+0x27c54b

clr!JITutil_MonContention+0xaf

dotnetnuke_log4net!log4net.Appender.AppenderSkeleton.DoAppend()

dotnetnuke_log4net!log4net.Util.AppenderAttachedImpl.AppendLoopOnAppenders()

dotnetnuke_log4net!log4net.Repository.Hierarchy.Logger.CallAppenders()

dotnetnuke_log4net!log4net.Repository.Hierarchy.Logger.Log()

DotNetNuke_Instrumentation!DotNetNuke.Instrumentation.LoggerSourceImpl+Logger.Fatal()

DotNetNuke_Web!DotNetNuke.Web.Common.Internal.DotNetNukeHttpApplication.Application_Error()

Figure 3, Redis Cache, Azure App Service slowness, Log4net

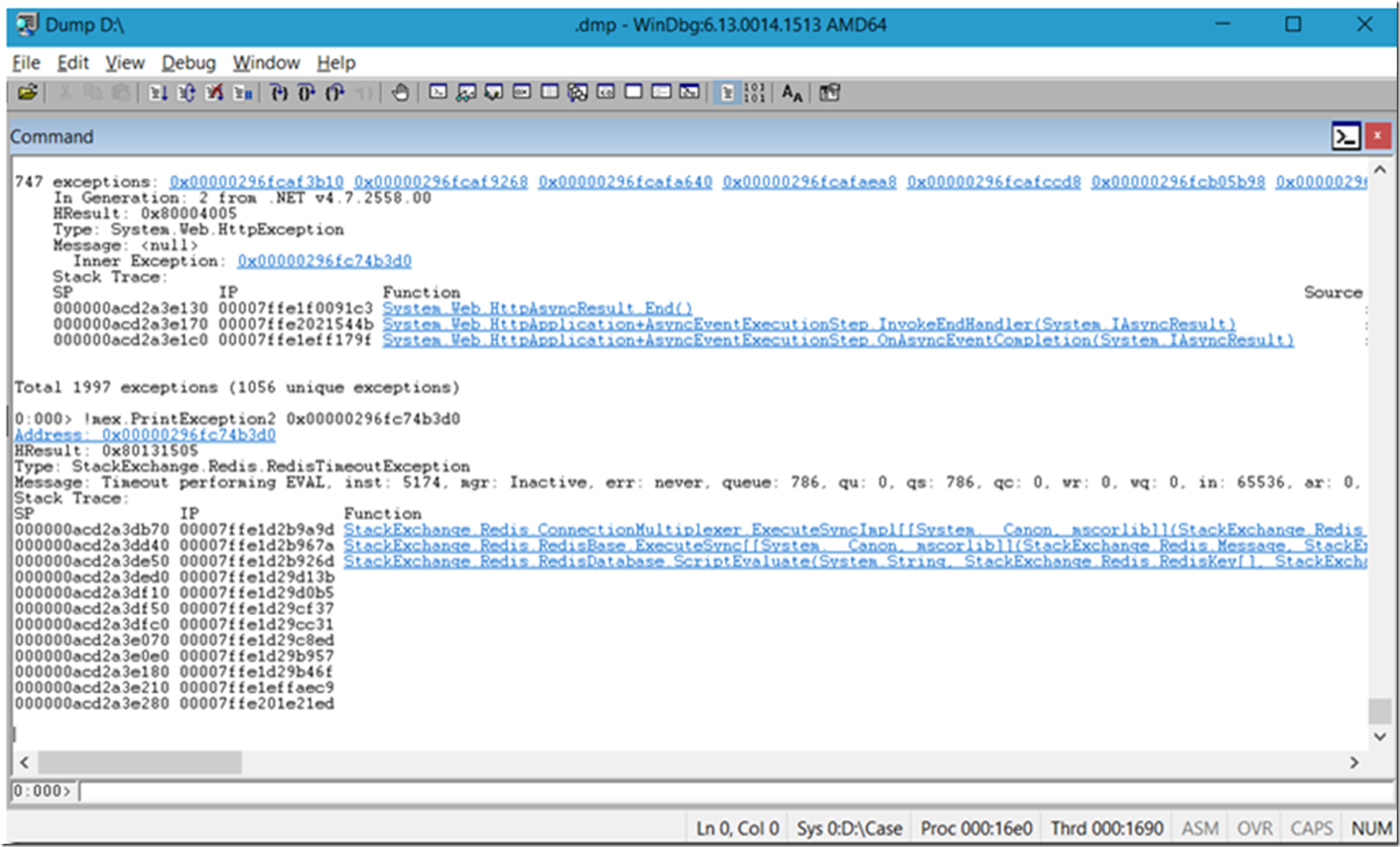

So I dumped out the exceptions using !mex.dae and found the following shown in Figure 4. There were 747 System.Web.HttpExceptions. Executing then !mex.PrintException2 on the InnerException, resulted in the following, see Figure 4.

Type: StackExchange.Redis.RedisTimeoutException

igure 4, Redis Cache, Azure App Service slowness, Log4net

So, we disabled Log4net and were able to move onto to the next issue that the customer was simply trying to load too much into the cache all at once. Loading into Redis Cache takes some compute power and you can get some contention on the 4 CPU machines running the App Service.

Extra note

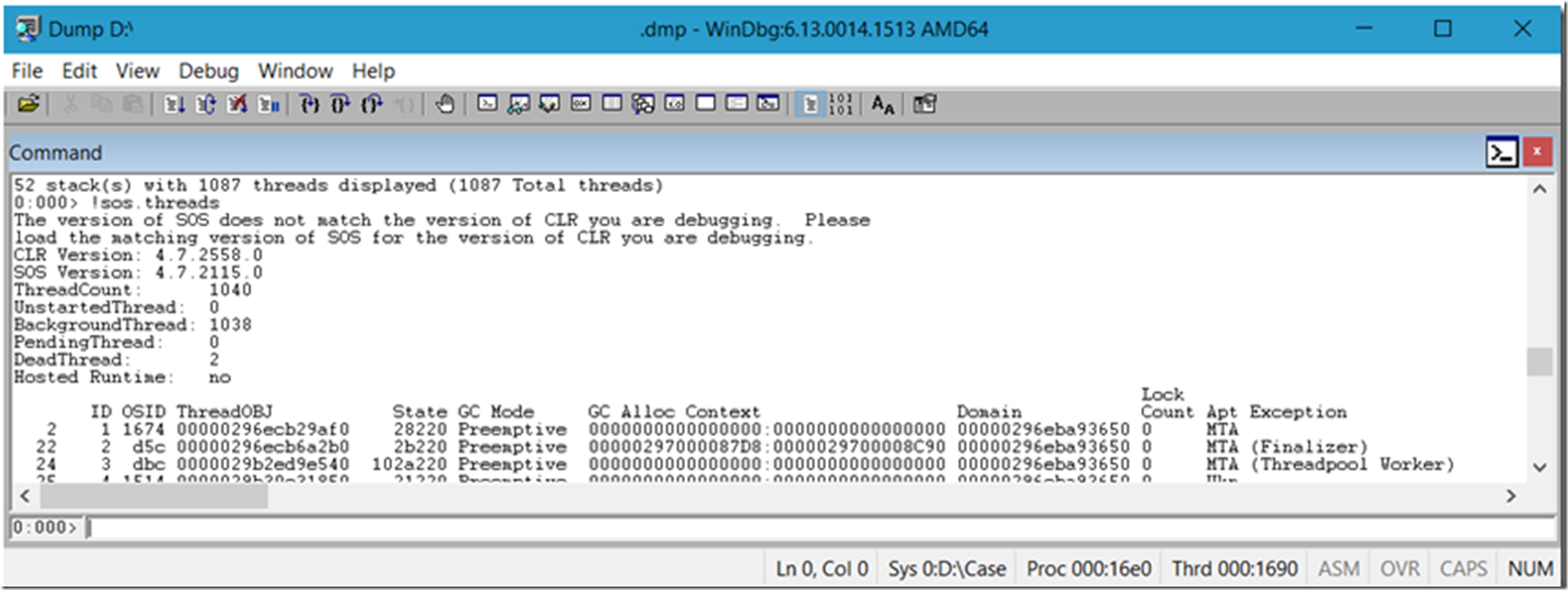

If you see in Figure 1 where I ran !sos.threadpool, look at the thread MaxLimit is set to 1000. I ran !sos.threads, Figure 5 and you can see 1040 threads are running. You might be eager to simply increase the threads, but I would expect this wouldn’t have solved the problem because the threads were locked, it likely would have just made it worse, but still visible.

Figure 5, Redis Cache, Azure App Service slowness

In my IIS debugging lab series, specifically, “Lab 7: Integrated versus Classic mode” here, I call out the processModel(*). This is the location you would set the limits for the thread pool.