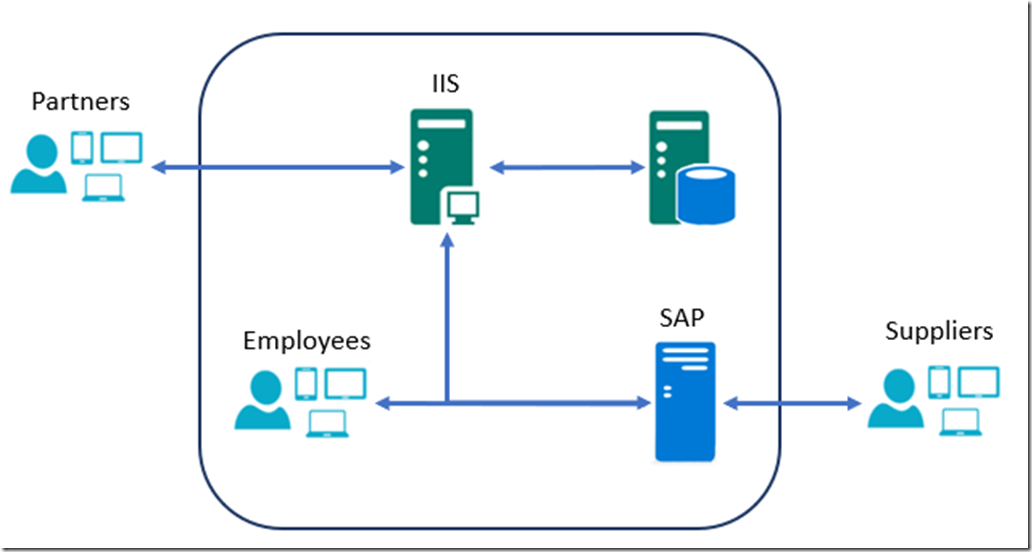

I am a fan of decoupling the different components of a transaction to the point where it makes sense. What does decoupling mean? When I first started coding and building IT solutions, I can’t say the idea of decoupling existed for me at all. When I created an order entry system using Active Server Pages (ASP), the Component Object Model (COM) and ADODB on IIS 4.0, everything required to validate and store the details of the order was performed in real time. The infrastructure required for that spanned 3 tiers, all of which had to be available and responsive for the order to succeed. Three connections had to hold at the same time: the client to the web server, the web server to the database, and the web server to the ordering and logistics service, which we ran on SAP. Figure 1 illustrates how that looked.

Figure 1, a tightly coupled computing system

The client entered the order details in the web browser. A little JavaScript performed some client-side validation, but most of the validation happened server side. Once the details were entered, the client pressed the order button. The web server received the details and validated them. If all went well, the information was checked against data stored in the database and written to the database, and then, again if all went well, the order was officially placed into the SAP ordering system that managed the finance and logistics of order processing. There were numerous points in this flow where the transaction could fail, and in case of an error, getting a helpful exception back to the client, one that also explained how to proceed, wasn’t easy. Not to mention cleaning up any data that was stored in the database but didn’t make it to SAP. The most important part was getting a message back to the client that either confirmed the order or described the problem along with the next steps for solving it.

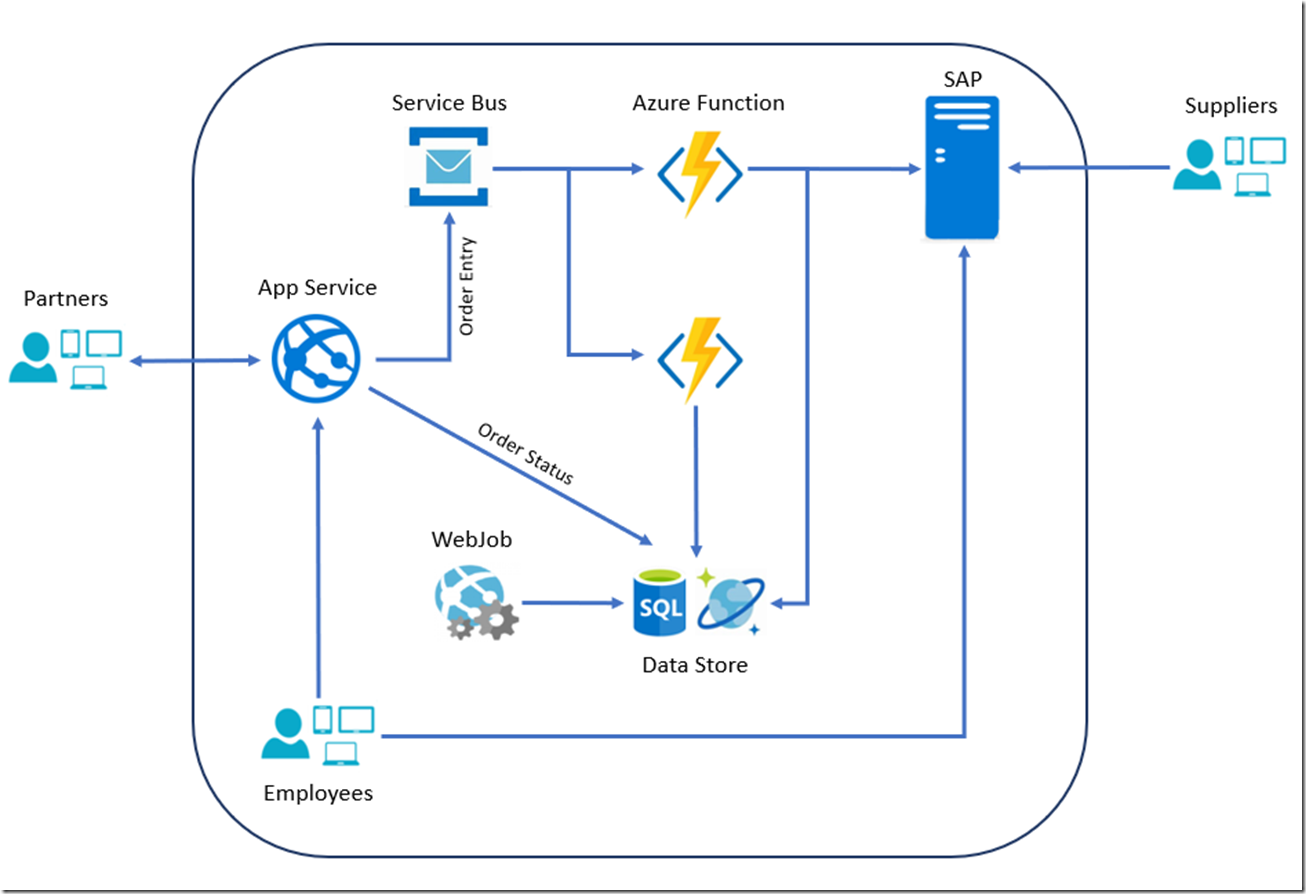

I have been a fan of decoupling ever since I started to understand it and learn ways to implement it. At the time of the solution described above, Electronic Data Interchange (EDI) already existed and could have been used to remove the real-time reliance on backend services, but it wasn’t something many people understood. The idea behind EDI, an agreed-upon format for the data sent between two or more computer systems, has since progressed through XML to JSON and perhaps some YAML. In a decoupled solution, those “documents” are stored in a secure location and processed later rather than in real time, aka offline. If I were to architect the same system shown in Figure 1 today, I would design it as shown in Figure 2. Please note that I work at Microsoft, specifically on the Azure App Service / Functions team, so the products I selected are the ones I know.

Keep in mind that the last few percent of availability and redundancy are typically the most complex and expensive to achieve. For example, the solution in Figure 1 would typically have achieved availability of between 95% and 98%, and moving that to 98% – 99%+ is hard and has historically taken a lot of time, money, complexity and maintenance. I call out “historically” here because reaching those levels is not so costly with some Azure products these days.
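One way to see why a tightly coupled chain like Figure 1 struggles to get past the high 90s: when every tier must be up for an order to succeed, and failures are assumed to be independent, the availabilities multiply. The sketch below uses hypothetical per-tier figures of 99%, not measurements from the original system. The second list assumes that in a decoupled design the client only waits on the web tier and a queue with a 99.9% SLA (the Service Bus figure quoted later in this post), while the database and SAP work happens offline.

```python
from math import prod


def serial_availability(availabilities: list[float]) -> float:
    """Availability of a chain in which every component must be up at the same time.

    Assumes component failures are independent of one another.
    """
    return prod(availabilities)


# Hypothetical per-tier availabilities for the Figure 1 chain:
# web tier, database, SAP ordering service.
coupled = [0.99, 0.99, 0.99]

# Hypothetical client-facing path for a decoupled design:
# web tier plus a 99.9% queue; database and SAP are processed offline.
decoupled = [0.99, 0.999]

print(f"coupled:   {serial_availability(coupled):.4f}")    # coupled:   0.9703
print(f"decoupled: {serial_availability(decoupled):.4f}")  # decoupled: 0.9890
```

Adding a tier to the synchronous path can only lower the product, which is why moving slow or fragile dependencies off that path is such an effective lever.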

Figure 2, the decoupling of a tightly coupled computing system

Is 95% – 98% enough? These days, no! One way to increase availability is decoupling. In my experience, it is very unlikely that inserting a message (aka an order) into a Service Bus queue will fail; the Service Bus SLA, for example, is 99.9%. As a result, the client, aka partners and employees, would almost always get a successful response in real time. That doesn’t mean the order will be successfully placed into SAP and/or the database, but it does mean the order has been stored and can now be processed and managed offline. That keeps your customers, partners, employees and/or suppliers happy, which is extremely important! It also frees people from any potential real-time follow-up, so they can move on to their other pressing activities and be more productive. I won’t go into the nitty-gritty of the solution in Figure 2, because this article is about decoupling rather than that particular solution. I hope you found this information useful.
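A minimal sketch of the decoupling described above, assuming Python’s in-memory `queue.Queue` as a stand-in for Service Bus and a background thread as a stand-in for a WebJob or Function. The names `place_order`, `save_and_submit`, `processed` and `dead_letters` are hypothetical and only for illustration. A real broker persists and replicates messages, and Service Bus has its own dead-letter queue, which this sketch does not model.

```python
import queue
import threading
import uuid

# Stand-in for a durable broker such as Azure Service Bus (assumption: in-memory only).
order_queue = queue.Queue()
processed: list[dict] = []     # orders the back end handled successfully
dead_letters: list[dict] = []  # orders parked for offline follow-up


def place_order(details: dict) -> dict:
    """Client-facing path: store the order message and confirm immediately."""
    order = {"id": str(uuid.uuid4()), **details}
    order_queue.put(order)
    return {"status": "accepted", "order_id": order["id"]}


def save_and_submit(order: dict) -> None:
    """Hypothetical back-end step standing in for the database write and SAP submission."""
    if order.get("quantity", 0) <= 0:
        raise ValueError("quantity must be positive")
    processed.append(order)


def worker() -> None:
    """Background path: drain the queue offline and park failures instead of failing the client."""
    while True:
        order = order_queue.get()
        if order is None:  # sentinel that tells the worker to stop
            order_queue.task_done()
            return
        try:
            save_and_submit(order)
        except Exception as exc:  # any back-end failure is parked, not surfaced to the client
            dead_letters.append({**order, "error": str(exc)})
        finally:
            order_queue.task_done()


background = threading.Thread(target=worker, daemon=True)
background.start()

# Both orders are accepted in real time, even though the second one fails later.
print(place_order({"sku": "A-100", "quantity": 2})["status"])  # accepted
print(place_order({"sku": "B-200", "quantity": 0})["status"])  # accepted

order_queue.put(None)  # stop the worker once the queued orders are handled
order_queue.join()
background.join()
print(len(processed), len(dead_letters))  # 1 1
```

The client only ever depends on the enqueue step succeeding; the failed order ends up in `dead_letters`, where it can be followed up offline instead of turning into an error page.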

I wrote this article because of a question I received from a colleague. A customer wanted to run a workload on Azure App Service and needed to modify the machine.config file so that execution could run for up to 10 minutes, perhaps even longer. For one thing, App Service is for responding to HTTPS requests; a website shouldn’t be expected to hold a connection open for long periods of time. For another, by definition you cannot make changes to the platform when running on PaaS, so changing the machine.config configuration isn’t supported. Without knowing many details about the requirements, I recommended running whatever takes those 10 minutes in a WebJob, a background-processing capability that runs on the App Service PaaS platform. The final point is that many people decide between running their IT solutions on PaaS or IaaS based simply on what each of those cloud offerings does and doesn’t support in isolation. There are big differences in support and maintenance responsibilities between the platforms, and they call for discussion, analysis, decisions and cost modeling. In other words, the decision involves more than picking the target platform based on what supports what, because you can break the solution down more granularly than that, as I showed in Figure 2.

Remember what I wrote at the beginning of this post: decouple “to the point where it makes sense”. Try that, or at least consider other solutions, before you choose IaaS simply because you cannot change a value in the machine.config file!