It wouldn’t be prudent to divulge the intellectual property (IP) details of how Azure App Services are configured, but, right or wrong, I like to point to Azure Pack Web Sites (*) as the building block of Azure App Services. However, keep in mind that Azure Stack is a more recent version of an Azure Pack-like product and that Azure App Service has evolved, and continues to evolve, beyond what you will see in that APWS article I linked to. You can still learn something about how Azure App Service works by looking at APWS.

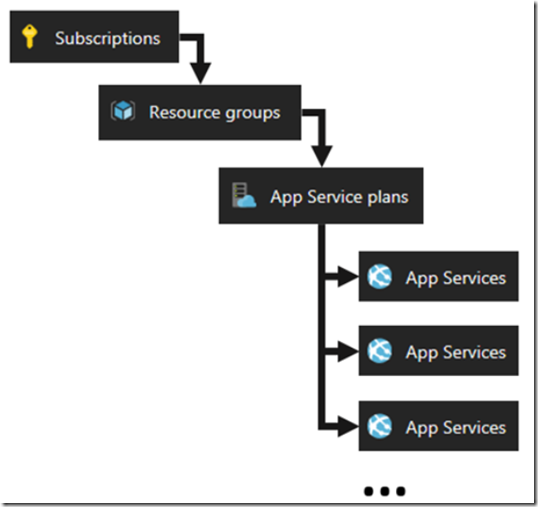

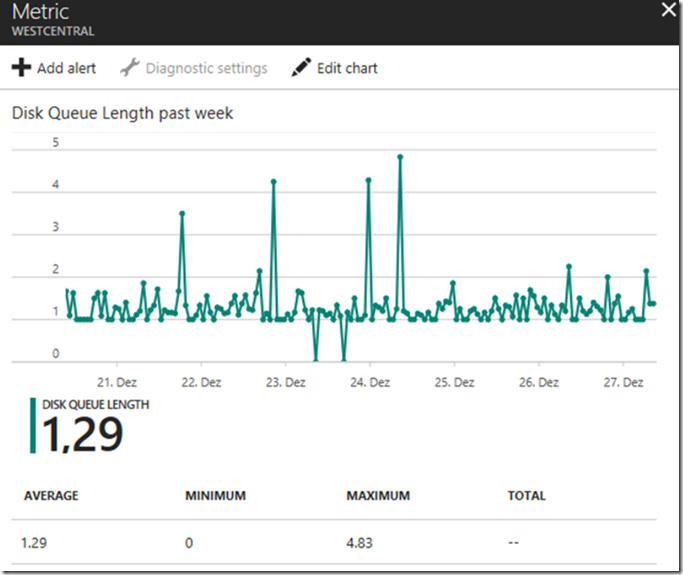

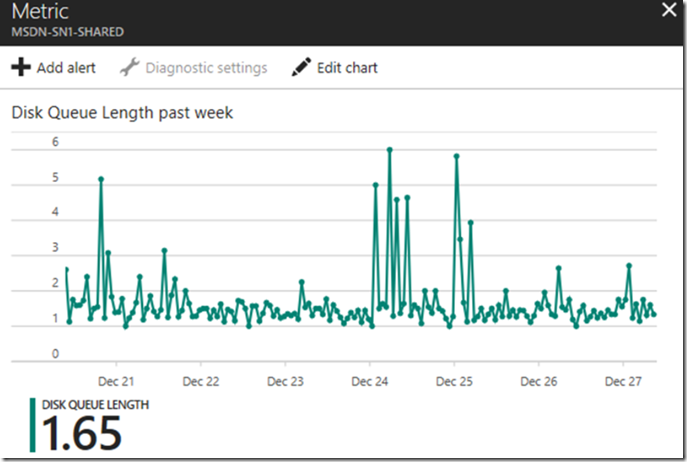

The purpose of this article is to point out what is and what is not a Disk Queue Length problem within the context of an Azure App Service. Keep in mind that this metric (Disk Queue Length) is for the App Service Plan (ASP), see Figure 1, and not the App Service, so any one of the App Services running on the ASP could be the culprit, not necessarily the one you find behaving in an unexpected manner. That App Service may be the cause, it may simply be the one impacted the most by high disk queuing, or the disk queuing may have nothing to do with the unexpected behavior. I wrote about an Azure App Service Plan (ASP) here.

Figure 1, Azure Subscription, Resource Group, App Service Plan and App Service relationship graph

What is a normal, acceptable disk queue length? I read here that if the “Average Queue Length exceeds twice the number of spindles, then you are likely developing a bottleneck.” I interpret ‘spindles’ to mean physical or logical disks.

* The reason I wrote the first paragraph of this article is that I will not personally describe in detail how the drives are configured, because it is IP.

Based on the definition, if I have 1 physical or logical disk and my average Disk Queue Length is <= 2, then there shouldn’t be significant concern. I will share this, IP or not, that there is at least 1 physical or logical disk working per ASP.
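The rule of thumb quoted above is simple arithmetic, and it can be sketched as a small check. This is only an illustration: the function name `exceeds_rule_of_thumb` and the default of 1 spindle are my own assumptions, and the real number of disks behind an ASP is not something this article discloses.

```python
def exceeds_rule_of_thumb(avg_queue_length: float, spindles: int = 1) -> bool:
    """Return True when the average queue length is more than twice the spindle count.

    'spindles' is read here as the number of physical or logical disks.
    The default of 1 is an assumption for illustration, not a platform fact.
    """
    if spindles < 1:
        raise ValueError("spindles must be at least 1")
    return avg_queue_length > 2 * spindles


# Hypothetical readings against a single disk.
print(exceeds_rule_of_thumb(1.75))  # below the 2 x 1 limit
print(exceeds_rule_of_thumb(3.0))   # above the 2 x 1 limit
```

A result of `True` is only a hint that a bottleneck may be developing. It still needs to be tied to a symptom the application is actually showing.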

The #1 question is, what behavior are you experiencing that makes you concerned about the Disk Queue Length (DQL)? Slowness? An exception? Unavailability? Locking? If this is known, it will help you track down the issue, which may lead the investigation to DQL, but maybe not. The point is, in addition to looking at a metric and then trying to link it to some problem, work it from the other direction as well (link the problem to the metric and the metric to the problem).

* If you already know that your App Service will have high I/O, then consider LOCAL_CACHE, described here. Read the entire article and understand the complete behavior before implementing it. Also, if you have high I/O, consider using an Azure Storage Container via the SDK.

In closing I have 2 points.

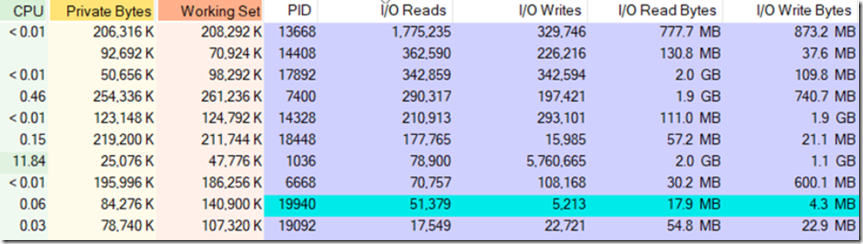

The first one is that I have read numerous articles that state anything over a 0 queue length deserves investigation and some analysis into the reasons for the disk queuing, and I fully respect that. I would pursue finding the reason for the queuing if the application is showing signs of being impacted by it. You can find and troubleshoot disk queue consumers using Process Explorer, as shown in Figure 2.

Figure 2, Disk Queue Length on Azure App Service

* We know that with an App Service the customer has no capability to run Process Explorer on their App Service Plan. If you need that level of access, then you would need to run on an Azure VM. That is why, if you believe your App Service is impacted by high Disk Queue Length, you should search for the encountered symptoms as well and confirm that those symptoms can be linked to a high disk queue length. Also, if you already know that one or more of the App Services running on the App Service Plan reads/writes a lot, then you might already know how to solve the issue without contacting support.

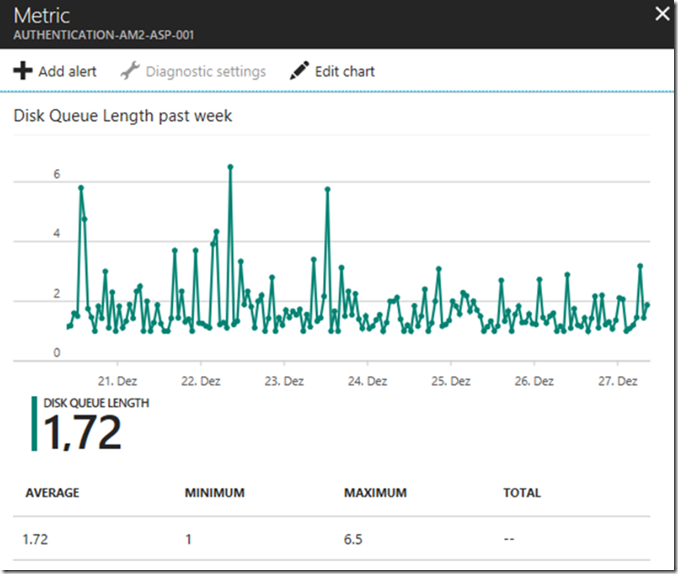

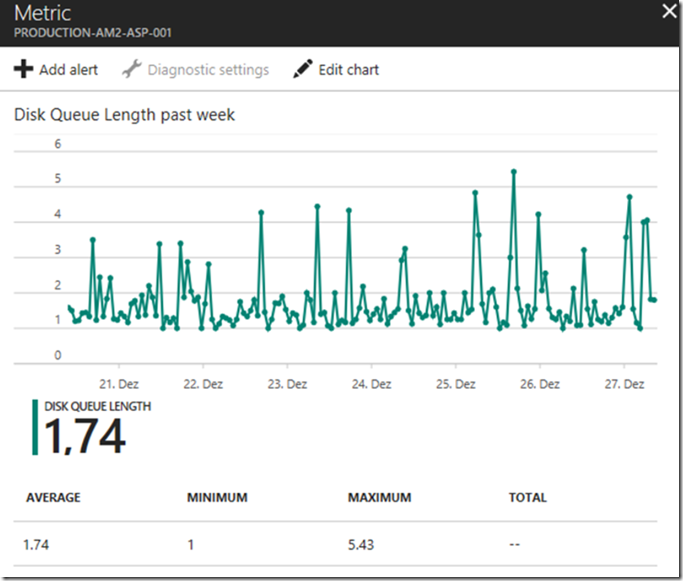

The second is that I took some shots of a few of my ASPs, and although they show an average of ~1.75, I have no outage, latency or concerns about this metric being at this level. I might consider placing an alert after monitoring it and seeing what a ‘normal’ level is for my App Service Plan over some months. For example, if my App Services are all running fine for 3 months and I see an average of 3, then I would place an alert somewhere between 6 and 7. Keep in mind that when we patch the machine running your ASP, you might get an alert.

Lastly, if you add new App Services to your App Service Plan, I would recommend that you review the alerts and make any appropriate changes.
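To make the baseline-then-alert idea concrete, here is a minimal sketch. It assumes you have exported some Disk Queue Length averages for your ASP into a plain list. The sample values, the function name `suggest_alert_threshold` and the multiplier of 2.0 are all hypothetical; the multiplier is chosen only because it turns a baseline of 3 into an alert level of 6, in line with the example above.

```python
from statistics import mean


def suggest_alert_threshold(samples, multiplier=2.0):
    """Return (baseline, threshold) for a list of Disk Queue Length averages.

    baseline is the mean of the samples collected while everything ran fine;
    threshold is baseline * multiplier. The multiplier is an assumption.
    """
    if not samples:
        raise ValueError("at least one sample is required")
    baseline = mean(samples)
    return baseline, baseline * multiplier


# Hypothetical averages collected during a healthy period.
healthy_samples = [2.5, 3.0, 3.5, 3.0]
baseline, threshold = suggest_alert_threshold(healthy_samples)
print(f"baseline={baseline}, alert above {threshold}")
```

If you add App Services to the plan, rerun this against fresh samples, because the old baseline no longer describes the workload.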

Figure 3, Disk Queue Length on Azure App Service

Figure 4, Disk Queue Length on Azure App Service

Figure 5, Disk Queue Length on Azure App Service

Figure 6, Disk Queue Length on Azure App Service

HTH